An engineering deep dive into how Generative AI maps phonemes to visemes to create seamless video dubbing.

Have you ever watched a dubbed movie where the actor's lips move completely out of sync with the spoken words? It's distracting and ruins the immersion. This is the "bad dubbing" effect that has plagued localized content for decades.

Today, Generative AI is tackling this problem with Lip-Sync AI, a technology that modifies the video frames themselves so the speaker's mouth movements match the new audio track.

But how does it actually work? Let's dive into the machine learning and engineering architecture behind this magic.

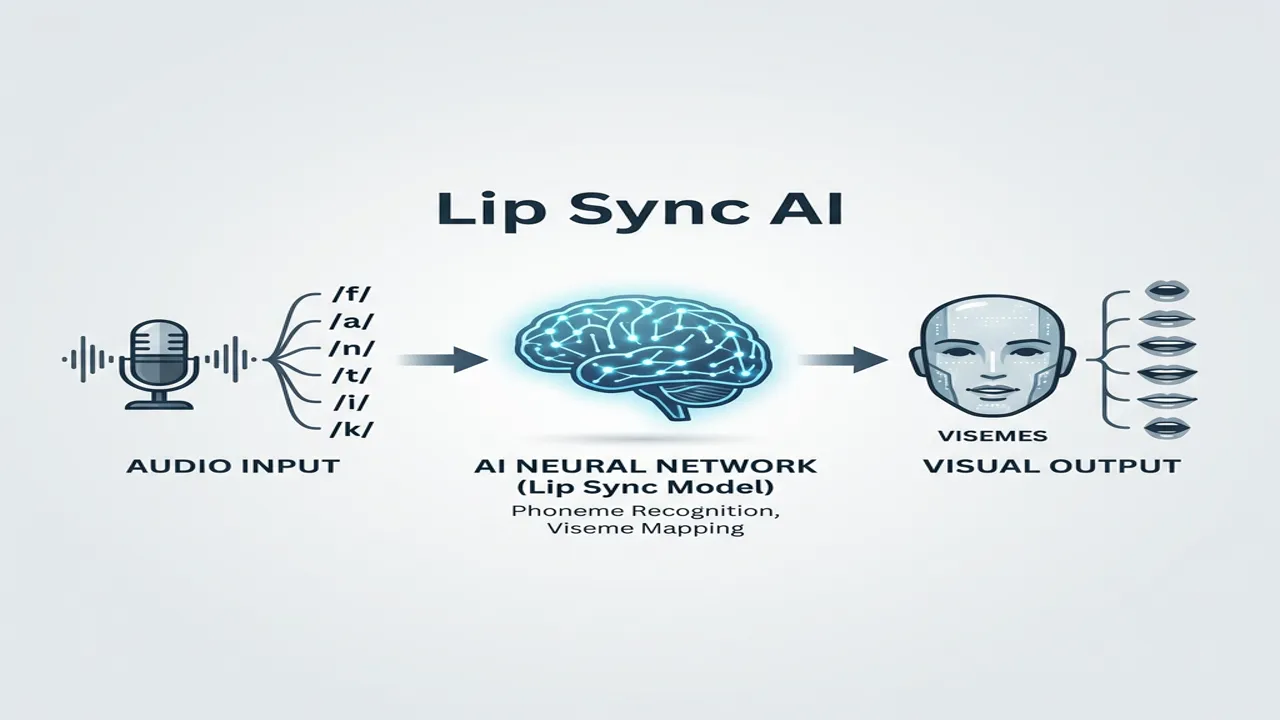

The mechanism of Lip-Sync AI

At its heart, AI lip-syncing is a computer vision and audio processing challenge. It relies on deep learning models, often Generative Adversarial Networks (GANs), to reconstruct facial movements. Here is the step-by-step engineering pipeline:

The first step is face detection. The AI scans every frame of the video to locate the speaker's face. It then maps out facial landmarks: distinct points around the eyes, nose, and jawline and, most importantly, the lips.
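To make the landmark step concrete, here is a minimal sketch that turns detected landmarks into a padded crop box around the lips. It assumes the widely used 68-point iBUG 300-W layout, where 0-based indices 48 to 67 trace the mouth. The function name `mouth_bbox` and its `pad` parameter are hypothetical, and the landmarks themselves would come from whatever face detector and landmark model a given pipeline uses.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

# Assumption: 68-point iBUG 300-W landmark layout; 0-based indices 48-67 are the lips.
MOUTH_INDICES = range(48, 68)


def mouth_bbox(landmarks: List[Point], pad: float = 0.2) -> Tuple[int, int, int, int]:
    """Hypothetical helper: padded (x_min, y_min, x_max, y_max) box around the lips.

    `pad` is a fraction of the lip box's width/height added on each side.
    """
    if len(landmarks) != 68:
        raise ValueError(f"expected 68 landmarks, got {len(landmarks)}")
    xs = [landmarks[i][0] for i in MOUTH_INDICES]
    ys = [landmarks[i][1] for i in MOUTH_INDICES]
    w, h = max(xs) - min(xs), max(ys) - min(ys)
    # floor/ceil so the integer pixel box never cuts into the lips
    return (
        math.floor(min(xs) - pad * w),
        math.floor(min(ys) - pad * h),
        math.ceil(max(xs) + pad * w),
        math.ceil(max(ys) + pad * h),
    )
```

In a video pipeline this would run once per frame, and the resulting crop marks the region that the lip-sync model later edits.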

In parallel, the AI analyzes the new audio track (the translated voice) and breaks the speech into phonemes, the smallest units of sound that distinguish one word from another (like the "b" in "bat" or the "th" in "thing").
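The audio analysis also has to line up with video time. The sketch below assumes the phonemes have already been timestamped (for example by a forced aligner) and labels each video frame with the phoneme that is active at the frame's center. `PhonemeSpan`, `phonemes_per_frame`, the ARPAbet-style symbols, and the `"SIL"` silence label are illustrative assumptions, not the output format of any specific tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PhonemeSpan:
    phoneme: str  # hypothetical ARPAbet-style symbol, e.g. "B" or "TH"
    start: float  # seconds
    end: float    # seconds (exclusive)


def phonemes_per_frame(
    spans: List[PhonemeSpan], fps: float, n_frames: int, silence: str = "SIL"
) -> List[str]:
    """Label each video frame with the phoneme active at the frame's center time."""
    labels = []
    for f in range(n_frames):
        t = (f + 0.5) / fps  # center of frame f, in seconds
        label = silence      # frames not covered by any span count as silence
        for span in spans:
            if span.start <= t < span.end:
                label = span.phoneme
                break
        labels.append(label)
    return labels


# "bat" = B AE T, sampled at 10 fps for half a second
spans = [PhonemeSpan("B", 0.0, 0.1), PhonemeSpan("AE", 0.1, 0.3), PhonemeSpan("T", 0.3, 0.4)]
print(phonemes_per_frame(spans, fps=10, n_frames=5))
```

At 25 fps each frame covers 40 ms, so a very short phoneme can fall between two frame centers. That is one reason many pipelines condition each frame on a short window of audio rather than on a single label.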

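Next, each phoneme is mapped to a viseme, and this mapping is the crux of the synchronization. A viseme is the visual shape the mouth makes when producing a phoneme. The mapping is many-to-one: "p", "b", and "m", for example, all close the lips and look nearly identical on screen.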
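A phoneme-to-viseme lookup can be as simple as a many-to-one table. The grouping below is a deliberately small, hypothetical inventory over ARPAbet symbols, chosen only for illustration. Production systems define their own (usually larger) viseme sets, and some learned models map audio to mouth shapes without an explicit viseme table at all.

```python
from typing import Dict, List

# Hypothetical, simplified many-to-one grouping of ARPAbet phonemes into viseme classes.
PHONEME_TO_VISEME: Dict[str, str] = {
    "P": "closed", "B": "closed", "M": "closed",  # bilabials: lips pressed together
    "F": "lip_teeth", "V": "lip_teeth",           # lower lip against upper teeth
    "TH": "tongue_teeth", "DH": "tongue_teeth",   # tongue between the teeth
    "W": "rounded", "UW": "rounded", "OW": "rounded",
    "AA": "open", "AE": "open", "AH": "open",
    "SIL": "rest",
}


def to_visemes(phonemes: List[str], default: str = "neutral") -> List[str]:
    """Map phonemes to viseme classes; unknown phonemes fall back to `default`."""
    # ARPAbet vowels may carry a stress digit (e.g. "AE1"); strip it before lookup.
    return [PHONEME_TO_VISEME.get(p.rstrip("012"), default) for p in phonemes]


print(to_visemes(["B", "AE1", "T"]))   # "bat"
print(to_visemes(["TH", "IH1", "NG"]))  # "thing"
```

Because "P", "B", and "M" all land in the same class, the renderer only needs one closed-lip shape for all three, which is exactly the many-to-one property described above.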

Once the new mouth shape is determined for each frame, the AI must render it onto the original video. It's not just pasting a mouth; it's reconstructing the lower face.
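The compositing part of this step can be illustrated with feathered alpha blending. The generated lower-face patch is mixed into the original frame through a mask that fades to zero at the patch border, so no hard seam appears. This is a minimal sketch on grayscale images stored as nested lists; `feather_mask`, `paste_patch`, and the linear ramp are assumptions for illustration. Real renderers produce the patch with a neural network and may use more elaborate blending.

```python
from typing import List

Image = List[List[float]]  # grayscale image: rows of pixel intensities


def feather_mask(height: int, width: int, feather: int) -> Image:
    """Hypothetical alpha mask: 1.0 in the interior, ramping linearly to 0.0 at the edges."""
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            d = min(x, y, width - 1 - x, height - 1 - y)  # pixels to the nearest edge
            row.append(min(1.0, d / feather) if feather > 0 else 1.0)
        mask.append(row)
    return mask


def paste_patch(frame: Image, patch: Image, top: int, left: int, feather: int = 2) -> Image:
    """Blend `patch` into a copy of `frame` with its top-left corner at (top, left)."""
    if top + len(patch) > len(frame) or left + len(patch[0]) > len(frame[0]):
        raise ValueError("patch does not fit inside the frame")
    out = [row[:] for row in frame]  # leave the original frame untouched
    mask = feather_mask(len(patch), len(patch[0]), feather)
    for y, patch_row in enumerate(patch):
        for x, value in enumerate(patch_row):
            a = mask[y][x]
            # alpha blend: generated pixel where a=1, original pixel where a=0
            out[top + y][left + x] = a * value + (1 - a) * frame[top + y][left + x]
    return out
```

A larger `feather` gives a softer transition between the generated mouth region and the untouched cheeks and chin, at the cost of slightly more of the original frame showing through near the border.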

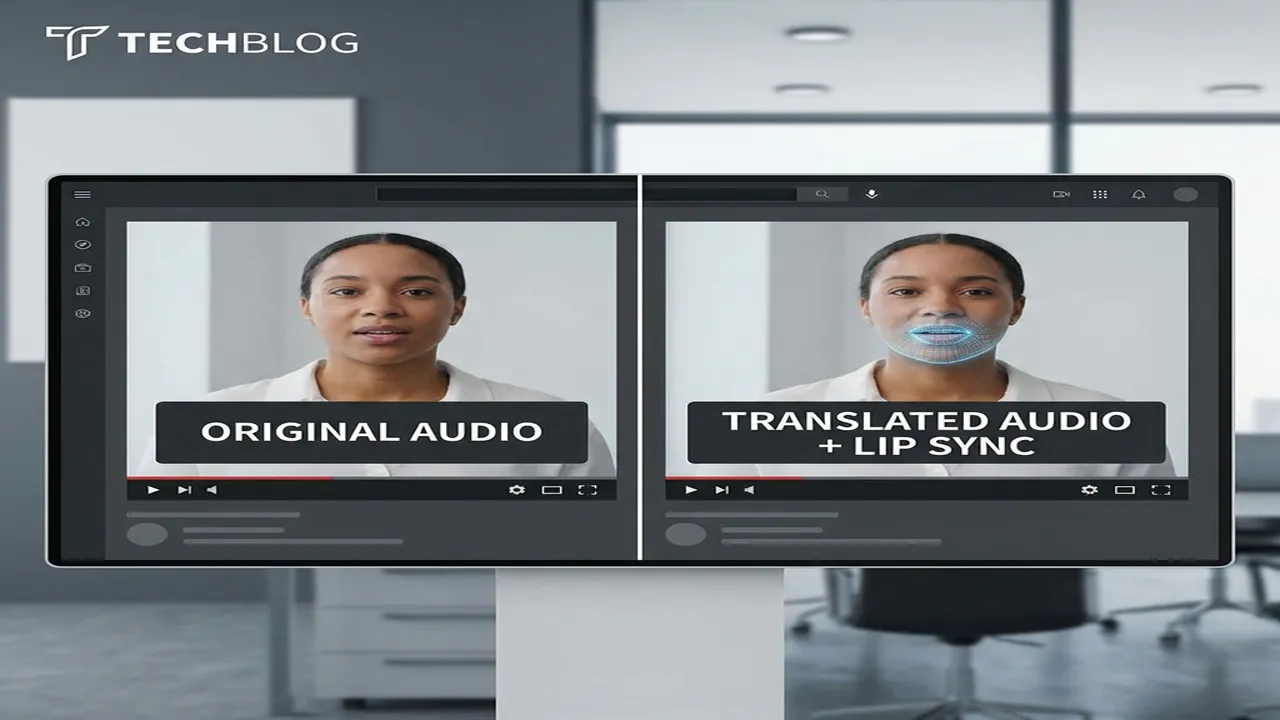

VideoDubber Lip-Sync Comparison

Early lip-sync tech felt robotic. The mouth would move, but the rest of the face remained frozen. Modern engineering, like the technology used by VideoDubber, incorporates:

While open-source models like Wav2Lip provided a foundation, enterprise-grade solutions require much more stability. VideoDubber utilizes an advanced, proprietary pipeline that offers:

For brands and creators looking to expand globally, this visual translation is just as important as linguistic translation. It builds trust and keeps viewers engaged.

Ready to try it? Experience the best AI lip-sync technology with VideoDubber today.